Next-level chat apps: explainable AI, built-in RAG, and more

Introducing new capabilities that support 10M words of input, increased transparency, and dedicated modes

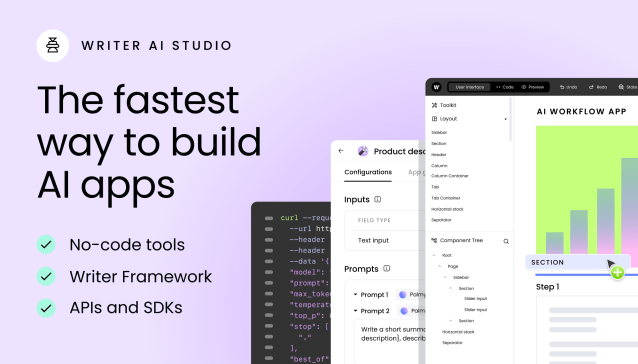

Generative AI has the power to transform work, but many enterprises are struggling to build accurate, production-ready AI apps at a pace that meets the needs of their people. In fact, a survey of 500 executives and AI professionals found that most in-house generative AI projects are mediocre or worse, with only 17% of respondents rating theirs as excellent. By abstracting away the complexities of generative AI, our full-stack platform of Writer-built LLMs, graph-based RAG, AI guardrails, and development tools makes it easy for organizations like Vanguard, Intuit, L’Oreal, and Accenture to deliver impactful ROI. With Writer, enterprises can quickly build and deploy a wide range of AI apps, including text generation apps, chat apps, and more.

We’re excited to share a significant set of upgrades to chat apps in Writer that make them even more powerful, transparent, and easy to use. These new capabilities include built-in RAG to analyze up to 10 million words, explainable AI features, dedicated modes, voice rewrites, custom instructions, and more. These enhancements are now available in chat apps built in AI Studio as well as in Ask Writer, our prebuilt chat app available to all users.

Built-in RAG to analyze up to 10 million words

LLMs on their own have a limited understanding of your products and business. To generate accurate, contextual insights and outputs, AI apps must have access to your most important files and documents. With chat apps, you can now upload files with up to 10 million words (the equivalent of 20,000 pages) and ask questions, conduct research, or generate outputs.

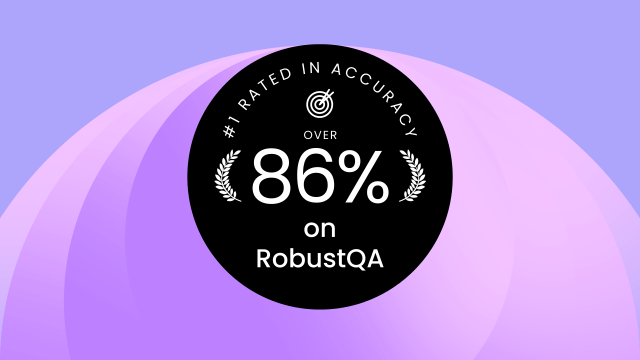

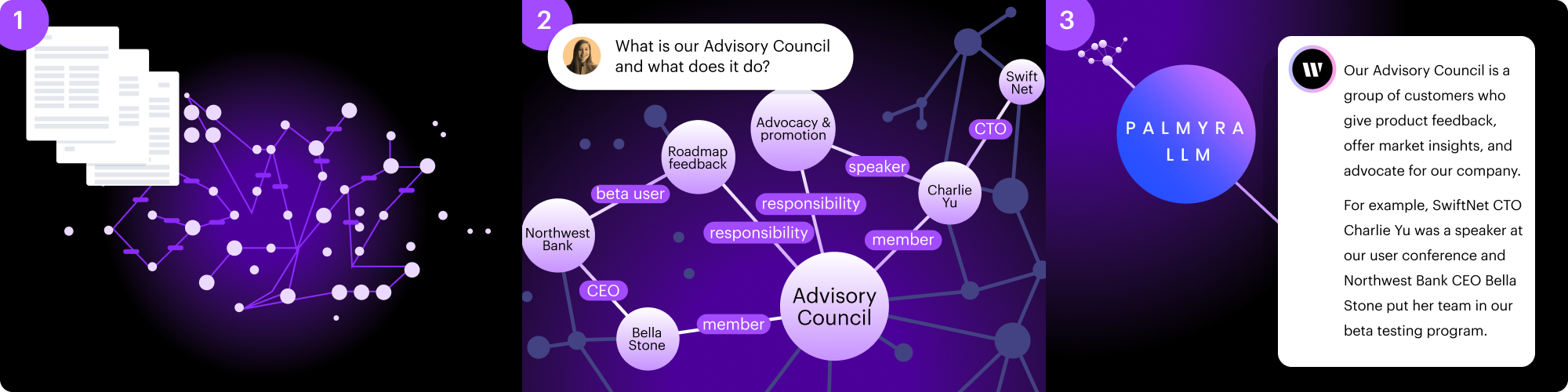

This new capability is made possible by our unique graph-based retrieval-augmented generation (RAG) technology, which also powers Writer Knowledge Graph. RAG is a natural-language technique used for question-answering. When given a question, it retrieves contextually relevant data points from a large corpus of uploaded data and passes them to an LLM to generate an accurate answer. In a recent RAG benchmarking report that evaluated eight popular RAG approaches, Writer RAG achieved first place in accuracy as measured by the RobustQA benchmark. Leading enterprises, such as Commvault, use our RAG technology to build powerful and accurate AI apps connected to their internal data and knowledge.
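
To make the retrieve-then-generate flow concrete, here’s a minimal Python sketch. It is not Writer’s implementation: the word-overlap scoring, the stopword list, the sample corpus, and the function names `tokenize`, `retrieve`, and `build_prompt` are all assumptions chosen for illustration, and the final call to an LLM is left out.

```python
import re
from collections import Counter

# Hypothetical stopword list so common words don't dominate the overlap score.
STOPWORDS = {"a", "an", "and", "are", "in", "is", "of", "the", "to", "what"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and return its word tokens, minus stopwords."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k passages ranked by word overlap with the question."""
    question_counts = Counter(tokenize(question))
    scored = []
    for passage in passages:
        shared = question_counts & Counter(tokenize(passage))
        score = sum(shared.values())
        if score > 0:
            scored.append((score, passage))
    # list.sort is stable, so ties keep their original corpus order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the retrieved passages and the question into one LLM prompt."""
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(context, start=1))
    return f"Answer using only these sources:\n{numbered}\n\nQuestion: {question}"


# Made-up sample corpus, purely for illustration.
corpus = [
    "The refund policy allows returns within 30 days.",
    "Our office is in Denver.",
    "Refund requests need a receipt.",
]
question = "What is the refund policy?"
context = retrieve(question, corpus)
prompt = build_prompt(question, context)
print(prompt)
```

Only the passages that share words with the question reach the prompt, which is the core idea: the LLM sees a small, relevant slice of the corpus rather than all of it.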

By building RAG directly into our chat apps, we’re empowering enterprises to tackle powerful new use cases. Here are just a few examples of what Writer customers have already taken into production:

- Company deep dives: Review financial documents and key resources to get quick insights on your prospects, such as the state of their business, industry trends, and competitive intel.

- Answering RFPs: Upload key documents and resources to easily complete new RFP requests.

- AML/KYC workflows: Analyze a customer’s financial statements, risk assessment forms, and account opening documents to complete periodic customer review reports.

- Work with long-form reports and papers: Ingest white papers, scientific research, technical documentation, and more to summarize, synthesize, or analyze the findings.

RAG delivers accuracy in knowledge retrieval

A common approach to analyzing longer documents with generative AI is to use an LLM with a larger context window. With this approach, the user’s question and the entire document are sent to the LLM’s context window in a single request, and the LLM reasons over them and generates a response. But research has shown that accuracy tends to degrade on advanced tasks when relying only on LLMs with larger context windows. Our own technical experimentation validates this: for knowledge retrieval tasks, increasing the context window alone didn’t give us results that met the quality or accuracy bar that our enterprise customers expect.

While LLMs aren’t built for knowledge retrieval, RAG is. RAG is the optimal technique for question-answering tasks when you’re working with a large corpus of text and accuracy is of utmost importance. We took the same innovative graph-based RAG technology that powers our Knowledge Graph and built it directly into our chat apps. A specially trained LLM breaks down the uploaded files into data points, maps the semantic relationships between the data points, and stores the nodes and edges in a graph structure. When you ask a question about your file, Writer retrieves the relevant data points from the graph structure and passes them to our LLM to generate an accurate response.
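
As a rough illustration of storing data points as nodes and edges and then retrieving from that structure, here’s a toy Python sketch. It is an assumption-laden stand-in, not Writer’s graph-based RAG: the `DataPointGraph` class, the shared-word matching, the one-hop neighbor expansion, and the sample data points are all hypothetical, and in the real system a specially trained LLM does the extraction and relationship mapping.

```python
import re
from dataclasses import dataclass, field


def words(text: str) -> set[str]:
    """Return the set of lowercase word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class DataPointGraph:
    """Toy graph: nodes hold data point text, edges link related data points."""

    nodes: dict[str, str] = field(default_factory=dict)
    edges: dict[str, set[str]] = field(default_factory=dict)

    def add_data_point(self, node_id: str, text: str) -> None:
        """Store a data point as a node with no relationships yet."""
        self.nodes[node_id] = text
        self.edges.setdefault(node_id, set())

    def relate(self, first: str, second: str) -> None:
        """Record an undirected edge; both nodes must already be in the graph."""
        self.edges[first].add(second)
        self.edges[second].add(first)

    def retrieve(self, question: str) -> list[str]:
        """Return data points sharing a word with the question, plus their neighbors."""
        question_words = words(question)
        selected: list[str] = []
        for node_id, text in self.nodes.items():
            if not question_words & words(text):
                continue
            # Follow edges one hop so related context comes along with each match.
            for candidate in [node_id, *sorted(self.edges[node_id])]:
                if candidate not in selected:
                    selected.append(candidate)
        return [self.nodes[node_id] for node_id in selected]


# Made-up data points, purely for illustration.
graph = DataPointGraph()
graph.add_data_point("p1", "Acme launched the Falcon router in 2023")
graph.add_data_point("p2", "The Falcon router supports mesh networking")
graph.add_data_point("p3", "Quarterly revenue grew in Europe")
graph.relate("p1", "p2")

results = graph.retrieve("Which product supports mesh networking?")
print(results)
```

The question only matches the second data point directly, but the edge pulls in the related launch fact as well. That extra hop is what a graph structure adds over ranking isolated passages.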

New explainability and transparency features

As organizations increasingly rely on AI, they demand more explainability. Rather than blindly trusting the output from these black-box systems, users want to understand how the technology is arriving at its answers. Similarly, security and compliance teams need more AI transparency to properly assess and manage potential risks.

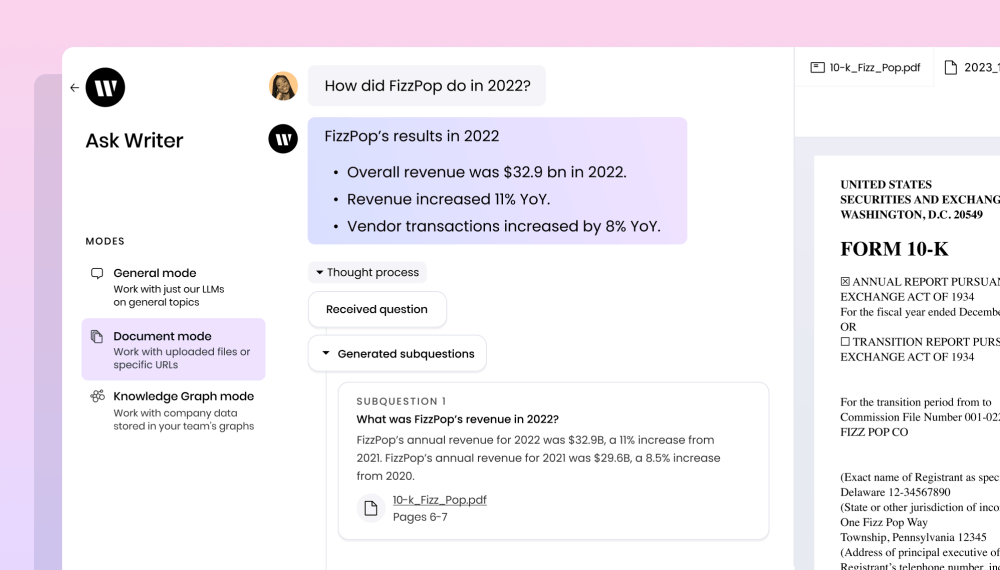

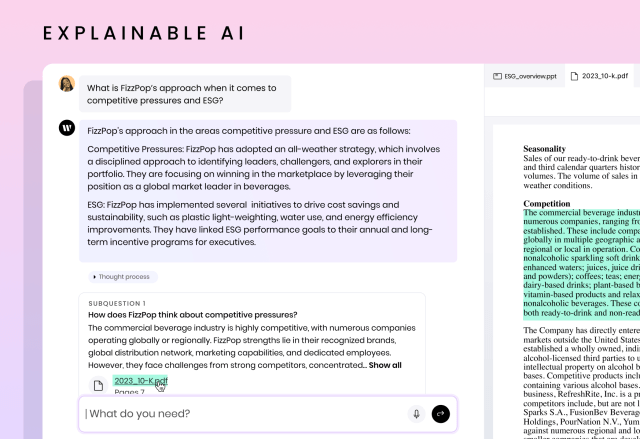

Explainable AI is crucial to building trust and confidence with our customers. With this latest update, Writer shows its thought process: the steps it took to formulate a response.

When you ask a broad, vague, or complex question, our chat apps don’t just provide an overall answer. They also decompose your original question into subquestions and provide an answer for each, showing you the specific excerpts from contributing sources. Beyond offering transparency, seeing the steps the AI took to formulate an answer gives users a feedback loop on the efficacy of their prompts, so they can refine their questions for better answers.
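
One way to picture the subquestion trace is as a list of records, each pairing a subquestion with its answer and supporting excerpts. The Python sketch below is hypothetical: splitting on the word "and" is a naive stand-in for LLM-driven decomposition, and `SubAnswer`, `decompose`, `answer_with_trace`, and `stub_answer` are illustrative names, not part of any Writer API.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class SubAnswer:
    """One step of the trace: a subquestion, its answer, and source excerpts."""

    subquestion: str
    answer: str
    excerpts: list[str]


def decompose(question: str) -> list[str]:
    """Naively split a compound question on the word 'and'."""
    parts = re.split(r"\s+and\s+", question.strip().rstrip("?"))
    return [part.strip() + "?" for part in parts if part.strip()]


def answer_with_trace(
    question: str,
    answer_fn: Callable[[str], tuple[str, list[str]]],
) -> list[SubAnswer]:
    """Answer each subquestion separately and keep the steps for display."""
    trace = []
    for subquestion in decompose(question):
        answer, excerpts = answer_fn(subquestion)
        trace.append(SubAnswer(subquestion, answer, excerpts))
    return trace


def stub_answer(subquestion: str) -> tuple[str, list[str]]:
    """Placeholder for a retrieval-backed answerer; returns labeled dummy text."""
    return f"(answer to: {subquestion})", [f"(excerpt supporting: {subquestion})"]


trace = answer_with_trace(
    "How did revenue change and who are the main competitors?", stub_answer
)
for step in trace:
    print(step.subquestion, "->", step.answer, step.excerpts)
```

Keeping each step as its own record is what lets an interface show the user which subquestion produced which part of the answer, and from which excerpts.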

Improved user experience for better outputs

Popular AI chat apps support a wide range of requests, but to date, they rely on one simple, open-ended interface to receive your prompt. From working with customers, we know that offering the same interface for every task, without any guidance or restrictions, doesn’t yield the best results. It places an undue burden on our customers to be consistently precise and specific in their prompting instructions.

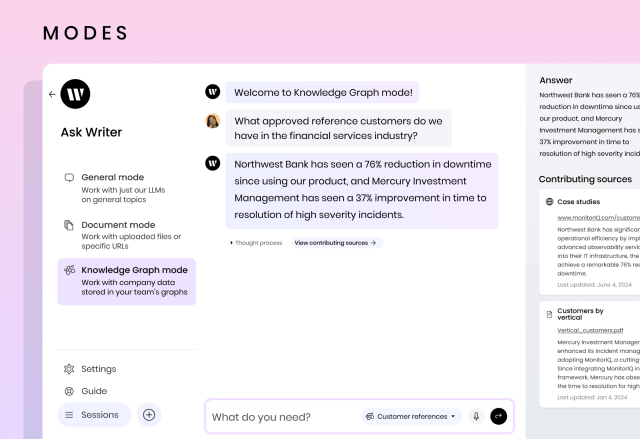

With this upgrade, we introduce “modes” — dedicated user experiences designed for specific types of tasks.

- General mode provides on-the-fly assistance from Writer-built LLMs to ideate, generate text, or get quick answers on general knowledge.

- Document mode helps you go deep on research or draft content based on a few specific files that you upload.

- Knowledge Graph mode connects chat apps to your key company data sources, so you can ask questions and generate content based on a large corpus of information.

We’re confident that modes will make it easier for users to communicate what they’re trying to accomplish, and as a result, get higher quality outputs and answers.
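
Conceptually, a mode tells the app which sources should ground a response. Here’s a small Python sketch of that idea under stated assumptions: the `Mode` enum, the `select_sources` function, and its parameters are hypothetical names for illustration and do not reflect how Writer implements modes.

```python
from enum import Enum


class Mode(Enum):
    """The three modes described above, as hypothetical enum members."""

    GENERAL = "general"
    DOCUMENT = "document"
    KNOWLEDGE_GRAPH = "knowledge_graph"


def select_sources(
    mode: Mode, uploaded_files: list[str], company_sources: list[str]
) -> list[str]:
    """Pick which sources ground the response for the chosen mode."""
    if mode is Mode.GENERAL:
        # General mode relies on the LLM alone, so no sources are attached.
        return []
    if mode is Mode.DOCUMENT:
        if not uploaded_files:
            raise ValueError("Document mode needs at least one uploaded file")
        return list(uploaded_files)
    # Knowledge Graph mode draws on connected company data sources.
    return list(company_sources)


files = ["q3-report.pdf"]
sources = ["wiki", "crm-notes"]
print(select_sources(Mode.DOCUMENT, files, sources))
```

Making the mode an explicit choice means the user states their intent once, instead of re-describing it in every prompt.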

We’ve also added a handful of features to make chat apps even more customizable and easy to use:

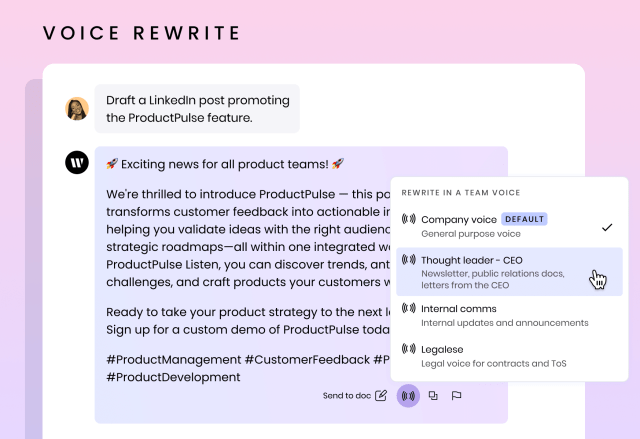

- Voice: rewrite responses in a specific tone and style, based on your team’s voice profiles.

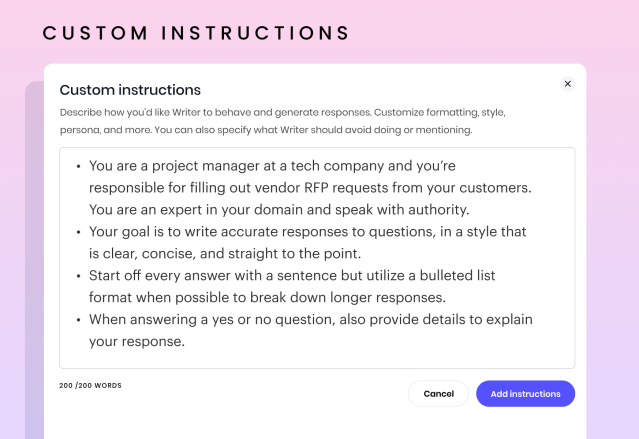

- Custom instructions: provide specific instructions for bespoke formatting, style, dos and don’ts, and more. Instead of adding these details each time you prompt, your custom instructions are automatically applied every time.

- Dictation: use spoken commands to make requests.
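
To show what applying custom instructions automatically could look like, here’s a minimal Python sketch. It assumes the instructions are simply prepended to each request; the `apply_custom_instructions` function and the prompt wording are hypothetical, not Writer’s actual mechanism.

```python
def apply_custom_instructions(instructions: list[str], prompt: str) -> str:
    """Prepend saved instructions to a request so users don't retype them."""
    if not instructions:
        return prompt
    rules = "\n".join(f"- {rule}" for rule in instructions)
    return f"Follow these instructions:\n{rules}\n\nRequest: {prompt}"


# Made-up saved instructions, purely for illustration.
saved = ["Use bullet points", "Keep answers under 100 words"]
print(apply_custom_instructions(saved, "Summarize the attached report"))
```

Because the saved instructions travel with every request, formatting and style rules stay consistent across a conversation.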

Enhancing accuracy, transparency, and user satisfaction

With built-in RAG, thought process, dedicated modes, and new customization features, Writer chat apps can now help you extract more valuable insights, conduct more in-depth research, and generate higher-quality output with greater ease. We’re committed to continuously innovating on our full-stack generative AI platform, and we’re excited to see how these latest improvements will help our customers be more productive and successful.

To learn more about these new features, try out our interactive demo or contact a Writer sales representative.